the agi dream keeps missing the point

intelligence isn’t some benchmark. it’s a social process.

I’m going to focus here on what I see, as a neuroscientist, as the deep structural and category error at the heart of most current AGI efforts: the idea that intelligence is centralised, that all we need is more data and a few tweaks to the algorithms, and lo, Skynet will be upon us (and, maybe, do for us, too).

And if not Skynet, it might be Legion that does for us (if you can remember the plot of the third-best Terminator sequel). The subtext of this one is that AGI is inevitable, no matter what we do: destroy Skynet, and never mind, ’cos Legion is happening anyway.

The vibes and feels from Hollywood and the tech-bro elite are of AGI’s inevitability. I don’t think AGI is inevitable, at least not until we start working from reasonable theories of human and animal cognition, of their underlying neurophysiology, and of how brains have been shaped to adapt by aeons of evolution.

Evolution is cleverer than any engineer. And intelligence, as it exists in the wild, is messier, richer, and more context-dependent than anything we are currently building. That doesn't mean progress is impossible, but it does mean we should be cautious about centralised dreams of artificial omniscience.

One caveat: I think brute-force, big data, pattern matching, machine learning, supervised (human in the loop) reinforcement learning, and other approaches will certainly continue to yield hugely impressive results, but these approaches won't be intelligence in the sense we usually understand it.

And, to be clear, I’m not saying this tech is useless! It’s not, and lots of people are using it: an obvious example is the assist it gives with coding. I’m simply saying it's not AGI, and our current ‘text prediction with vectors and loads of compute’ path won't take us to AGI.

Some other path might get us there: we got flight ✈️ once we stopped trying to emulate birds and flappy wings and tried other ways of getting airborne.

Drawing on lessons from biology, economics, and cognitive neuroscience, I argue the pursuit of artificial general intelligence through ever-larger language models is not just technically questionable; it’s conceptually incoherent: scaling text prediction through ever-larger models is not intelligence (whatever else it might be).

This is not the path intelligence has taken in evolution, nor is it how intelligence functions in human societies. We humans are much cleverer together (this is why we, alone of all the species on the planet, organise deliberative assemblies), and our machines will need to be clever together as well if they are to mimic human intelligence (more in my book ‘Talking Heads’ - US Kindle Ed available here).

the misaligned path to agi

centralised intelligence is a philosophical and biological error

The pursuit of artificial general intelligence (AGI) is increasingly framed as a technical arms race: whoever trains the biggest model on the most data, wins. The dominant vision is one of centralisation: gathering all the world’s text, code, speech, and images into a singular model capable of general cognition.

This is not just an engineering gamble: it’s a philosophical misstep and a scientific category error. Intelligence does not emerge from centralisation: it arises through distributed processes (biological, cultural, social) shaped by evolution and by interaction with a hostile environment where danger and death are everywhere and ever-present.

AGI, as currently conceived, misunderstands the nature of intelligence. And because it misunderstands it, it builds systems that are not general, not adaptive, and not intelligent in any meaningful sense. These systems don’t have models of the world: they know nothing of mental timelines, with past, present, and future ordered by distributed brain systems co-supporting imagination and space, as well as memory, driven by evolution in all sorts of directions.

knowledge is distributed, not downloadable

Friedrich Hayek, in his landmark essay The Use of Knowledge in Society, argued that knowledge in an economy is not centrally held. It is local, tacit, and constantly changing. Central planners fail not through lack of effort, but because they misunderstand the social structure of the adaptive use of knowledge itself.

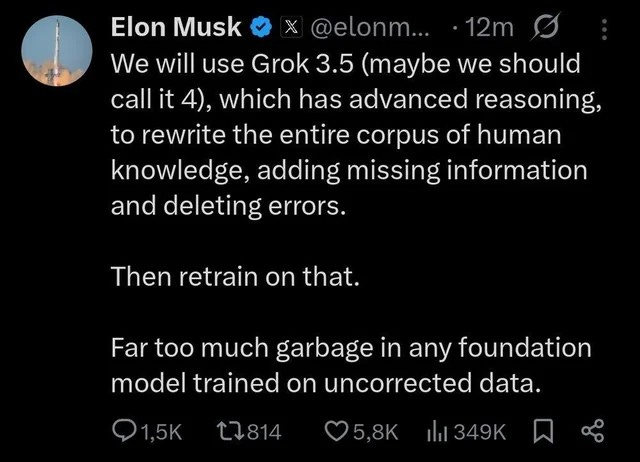

And this is precisely the error in contemporary AGI efforts: the assumption that knowledge is a stockpile: quantifiable, explicit, extractable, compressible, and legible.

Stop and consider: what if it's not?

(Sidenote: is this why he hates Wikipedia? A crowd-sourced collective endeavour showing that pooling and testing what we think we know according to public criteria is superior to Grok? Hmm!)

The brain is not a passive container for facts: it is a predictive, self-organising system that builds generative models of the world based on sparse input and intrinsic dynamics. Its activity is not driven by raw data ingestion, but by a continuous interaction between internal priors and sensory evidence.
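
For readers who like a formula, here is one textbook way to picture ‘priors meeting evidence’. It is a toy sketch, not a claim about how any real brain computes: I am assuming, purely for illustration, that the prior belief and the sensory signal are both Gaussian, and the symbols ($\mu_p$, $\sigma_p^2$ for the prior mean and variance; $x$, $\sigma_s^2$ for the observation and its noise variance; $K$ for the weighting) are my own labels.

$$
\mu_{\text{post}} = \mu_p + K\,(x - \mu_p), \qquad K = \frac{\sigma_p^2}{\sigma_p^2 + \sigma_s^2}, \qquad \sigma_{\text{post}}^2 = \frac{\sigma_p^2\,\sigma_s^2}{\sigma_p^2 + \sigma_s^2}
$$

With a prior of $\mu_p = 0$, $\sigma_p^2 = 1$ and a noisy observation $x = 2$, $\sigma_s^2 = 4$, the weighting is $K = 1/5$, so the updated estimate moves only to $\mu_{\text{post}} = 0.4$: the belief shifts towards the evidence, but only as far as the evidence deserves to be trusted. Under these assumptions, a handful of observations goes a long way when the priors are good.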

This is why a child can acquire language from limited exposure to a language community, while a large language model needs terabytes of training data and still doesn't understand what it is saying (nor can it count the number of b's in strawberry or blueberry, or know why you can’t melt an egg, unless it’s made of chocolate, or whatever 😉).

AGI systems built on data centralisation reproduce the central planner’s mistake: assuming more data will produce better understanding and prediction. But understanding is not data accumulation: understanding is the result of dynamic inference in an environmental and social context.

“Through extensive experimentation across diverse puzzles, we show that frontier LRMs face a complete accuracy collapse beyond certain complexities. Moreover, they exhibit a counter-intuitive scaling limit: their reasoning effort increases with problem complexity up to a point, then declines despite having an adequate token budget. By comparing LRMs with their standard LLM counterparts under equivalent inference compute, we identify three performance regimes: (1) low-complexity tasks where standard models surprisingly outperform LRMs, (2) medium-complexity tasks where additional thinking in LRMs demonstrates advantage, and (3) high-complexity tasks where both models experience complete collapse. We found that LRMs have limitations in exact computation: they fail to use explicit algorithms and reason inconsistently across puzzles.”

LRM: large reasoning model. The lesson: if you want to break these models, choose tacit or implicit problems that humans find easy to solve but difficult to describe, and for which there is only sparse or, better still, no text to scrape on the public internet. The models will generate a word salad 🥗, but that's it. Problems that can be solved only through learning by doing are good examples: motor skills, recognising bullshit, reading a room, and so many more.